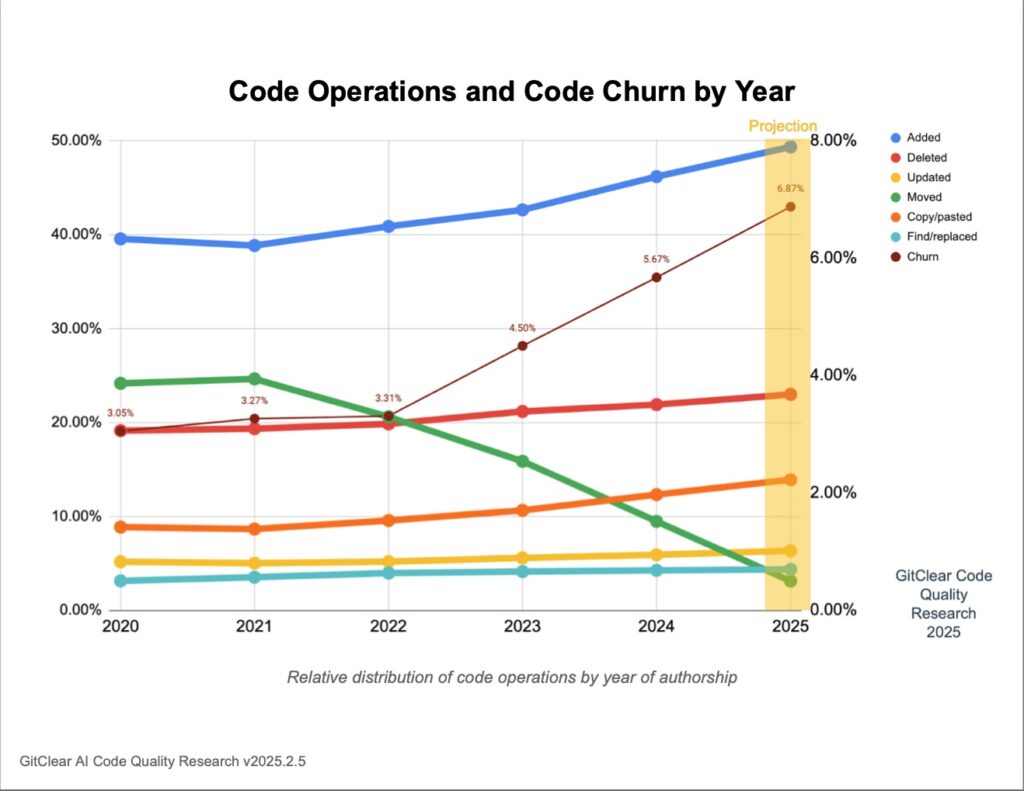

New research from GitClear, based on an analysis of 211 million lines of code, suggests that AI coding assistants are eroding code quality by increasing the amount of duplicated, copy/pasted code and reducing refactoring.

GitClear analyzed code from customers of its own code review tools as well as from open source projects, looking at metrics for types of code change such as added, deleted, moved and updated lines. The researchers found that the number of code blocks with five or more duplicated lines increased eightfold during 2024. Duplicated code may run correctly, but it is often a sign of poor code quality: it adds bloat, suggests a lack of clear structure, and increases the risk of defects when the same code is updated in one place but not in the others. GitClear adds that a function called from many different places, rather than copied and pasted into each of them, is more "battle-tested".
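To make the "five or more duplicated lines" metric more concrete, here is a minimal Python sketch of one way such blocks might be counted. It is an illustrative assumption, not GitClear's method: the function name `find_duplicate_blocks`, the whitespace normalization, and the use of overlapping five-line windows are all hypothetical choices.

```python
from collections import defaultdict


def find_duplicate_blocks(
    files: dict[str, list[str]], min_lines: int = 5
) -> dict[tuple[str, ...], list[tuple[str, int]]]:
    """Hypothetical duplicate detector (not GitClear's algorithm).

    Slides a window of `min_lines` consecutive lines over every file,
    ignoring leading/trailing whitespace, and returns each window that
    occurs more than once, mapped to its (file name, 1-based start line)
    locations.
    """
    seen: dict[tuple[str, ...], list[tuple[str, int]]] = defaultdict(list)
    for name, lines in files.items():
        normalized = [line.strip() for line in lines]
        for start in range(len(normalized) - min_lines + 1):
            window = tuple(normalized[start:start + min_lines])
            # Skip windows made up entirely of blank lines.
            if all(not line for line in window):
                continue
            seen[window].append((name, start + 1))
    # Keep only windows that appear in more than one location.
    return {window: locs for window, locs in seen.items() if len(locs) > 1}


if __name__ == "__main__":
    files = {
        "a.py": ["x = 1", "y = 2", "z = x + y", "print(z)", "return z"],
        "b.py": ["# copy", "x = 1", "y = 2", "z = x + y", "print(z)", "return z"],
    }
    for window, locations in find_duplicate_blocks(files).items():
        print(locations)
```

A duplicated run longer than five lines produces several overlapping windows in this sketch, so a production tool would presumably merge them into a single block before counting.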

The researchers also noted a 39.9 percent decrease in the number of moved lines. Moved code is evidence of refactoring: restructuring code to improve its quality without changing its behavior. According to GitClear, the ability to "consolidate previous work into reusable modules" is an essential advantage that human programmers have over AI assistants. 2024 was the first year in which copy/pasted lines outnumbered moved lines.
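The distinction between moved and copy/pasted lines can be illustrated with a deliberately simplified heuristic. The sketch below is hypothetical and is not GitClear's published classification: it assumes an added line counts as "moved" if the same change removed an identical line, as "copy/pasted" if it duplicates a line that still exists elsewhere in the codebase, and as "new" otherwise. The function name `classify_added_lines` is invented for illustration.

```python
from collections import Counter


def classify_added_lines(
    removed: list[str], added: list[str], existing: list[str]
) -> dict[str, int]:
    """Hypothetical heuristic for one change (not GitClear's definition).

    removed:  lines deleted by the change
    added:    lines inserted by the change
    existing: lines that remain elsewhere in the codebase
    """
    # Each removed line can "pay for" at most one moved line.
    removed_pool = Counter(line.strip() for line in removed if line.strip())
    existing_set = {line.strip() for line in existing if line.strip()}
    counts = {"moved": 0, "copy_pasted": 0, "new": 0}
    for line in added:
        key = line.strip()
        if not key:
            continue  # ignore blank lines
        if removed_pool[key] > 0:
            removed_pool[key] -= 1
            counts["moved"] += 1
        elif key in existing_set:
            counts["copy_pasted"] += 1
        else:
            counts["new"] += 1
    return counts


if __name__ == "__main__":
    print(classify_added_lines(
        removed=["def helper():", "    return 42"],
        added=["def helper():", "    return 42", "x = compute()", "print(x)"],
        existing=["print(x)", "y = 1"],
    ))
```

Under this heuristic, extracting a helper function shows up mostly as moved lines, while accepting a near-copy of logic that already exists elsewhere shows up as copy/pasted lines.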

The reason, according to GitClear, is that code assistants make it easy to insert new blocks of code simply by pressing the tab key. The AI is less likely to propose reusing a similar function elsewhere in the codebase, partly because of its limited context size, meaning the amount of surrounding code it takes into account when making suggestions.

In contrast to GitClear's report, Google's 2024 DORA "State of DevOps" report claimed that increased AI adoption had improved code quality by 3.4 percent; yet the DORA report also identified "an estimated reduction in delivery stability by 7.2 percent". The two reports are less contradictory than they first appear. At the level of individual code blocks, AI assistance might improve code quality; but the DORA researchers say that because AI lets developers deliver more code more quickly, more changes are likely to be needed later, and their research shows that larger changes are "slower and more prone to creating instability."

The impact of AI on coding can be spun in different ways. Advocates (and AI vendors) can point to increased productivity, which most developers confirm, while sceptics can point to the detrimental impact on code maintenance.

It is easy to say, as Google does, that organizations should establish guidelines for the use of AI to address these concerns; but tools that encourage bad practice will inevitably increase bad practice until they are improved.